Code&Data Insights


[Deep Learning] Regularization - Dropout | Data Augmentation | Multitask Learning

paka_corn 2023. 12. 10. 08:31

Regularization

: techniques that control the complexity of a model and prevent overfitting

=> Increase generalization ability!

=> Deep Neural Networks are models with large capacity and thus more prone to overfitting problems!!!

Data Augmentation

: create fake training data by applying some transformations to the original data

- Used for classification problems, mostly image and audio

=> Augmentation can be applied at different levels of abstraction (to the raw input or to hidden representations)

Noise injection might also work when noise is applied to the hidden units
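As a rough illustration of the two levels mentioned above, here is a minimal sketch in plain Python. The tiny "image", the label `"cat"`, the hidden activations, and the helper names `horizontal_flip` and `add_gaussian_noise` are all made up for this example; real pipelines would typically use an image or audio library instead.

```python
import random

def horizontal_flip(image):
    """Mirror a 2-D image (a list of rows) left to right."""
    return [row[::-1] for row in image]

def add_gaussian_noise(values, std, rng):
    """Add zero-mean Gaussian noise to a flat list of numbers."""
    return [v + rng.gauss(0.0, std) for v in values]

rng = random.Random(0)  # fixed seed so the sketch is repeatable

image = [[0.0, 0.5, 1.0],
         [0.1, 0.6, 0.9]]
label = "cat"  # hypothetical label; a flip should not change it

# Input level: the flipped image becomes a new training pair with the same label.
augmented = (horizontal_flip(image), label)

# Hidden level: perturb the activations of some hidden layer with noise.
hidden = [0.2, -1.3, 0.7]  # made-up activations
noisy_hidden = add_gaussian_noise(hidden, std=0.1, rng=rng)
```

The key assumption is that the transformation keeps the label valid: a mirrored cat is still a cat, but a mirrored digit or letter may not be.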

Multitask Learning

: divide the architecture into a shared part (early layers) and a task-specific part (last layers)

- The input must be the same for all tasks!

=> The shared representation becomes more robust and general because it is trained with more data from different tasks.

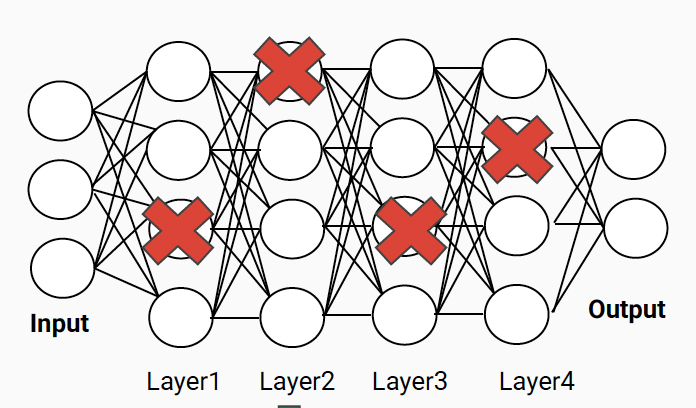
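The shared/task-specific split can be sketched as a forward pass with one shared layer and two heads. This is only a toy example in plain Python: the layer sizes, the two tasks ("A" with 2 outputs, "B" with 1 output), and the helper names `dense`, `relu`, and `init` are assumptions made for illustration.

```python
import random

def dense(inputs, weights, biases):
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    return [max(0.0, v) for v in values]

def init(n_out, n_in, rng):
    """Random weights and zero biases for an n_in -> n_out layer."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
shared = init(4, 3, rng)   # early layers, used by both tasks
head_a = init(2, 4, rng)   # hypothetical task A: 2 outputs
head_b = init(1, 4, rng)   # hypothetical task B: 1 output

def forward(x):
    features = relu(dense(x, *shared))  # shared representation
    return dense(features, *head_a), dense(features, *head_b)

out_a, out_b = forward([0.5, -1.0, 2.0])  # the same input feeds both tasks
```

In training, the losses of the tasks are typically combined (for example summed), so gradients from every task update the shared layer while each head only sees its own task.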

Dropout

: a regularization method that efficiently approximates training a large number of neural networks.

=> Dropout can be viewed as a cheap way to train ensemble models with an exponential number of architectures.

During training,

Some neurons are randomly ignored or dropped out

- neurons are temporarily removed from the network along with their input and output connections

- the probability of dropping a neuron is called dropout rate ρ

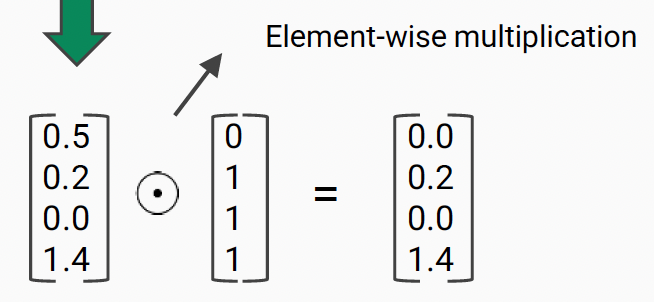

- One way to implement it is to sample a binary mask (containing 0s and 1s) for each layer and multiply it with the outputs of the neurons

=> For every input sample, we sample a different mask
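The mask-based implementation described above might look like the following sketch. The function names `sample_mask` and `dropout_train`, the activations, and the dropout rate of 0.5 are illustrative choices, not taken from any particular library.

```python
import random

def sample_mask(size, drop_rate, rng):
    """Binary mask: 0 with probability drop_rate, otherwise 1."""
    return [0 if rng.random() < drop_rate else 1 for _ in range(size)]

def dropout_train(activations, drop_rate, rng):
    """Apply a freshly sampled mask to one layer's outputs."""
    mask = sample_mask(len(activations), drop_rate, rng)
    return [a * m for a, m in zip(activations, mask)], mask

rng = random.Random(0)
batch = [[1.0, 2.0, 3.0, 4.0],
         [1.0, 2.0, 3.0, 4.0]]  # two made-up samples with identical activations

for sample in batch:
    # A different mask is drawn for every input sample.
    dropped, mask = dropout_train(sample, drop_rate=0.5, rng=rng)
    print(mask, dropped)
```

Each call draws a new mask, so two identical samples can pass through two different "thinned" networks.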

During testing,

- Dropout is not used at test time

- all the neurons are active, so we have to scale their outputs properly

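=> Without scaling, each unit receives a larger total input at test time than it did during training. This scale mismatch between training and test might significantly harm the performance.

=> We can compensate for the effect of dropout by multiplying each input to the next layer (i.e., each neuron's output) by (1-ρ) at test time.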
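To see why a factor of one minus the dropout rate is the right one, the sketch below enumerates every possible mask for a tiny layer and computes the exact average input that a downstream unit receives during training. The activations, weights, dropout rate of 0.2, and the function name `expected_masked_sum` are made up for this example.

```python
from itertools import product

def expected_masked_sum(activations, weights, drop_rate):
    """Exact expectation of a downstream weighted sum over all binary masks."""
    keep = 1.0 - drop_rate
    total = 0.0
    for mask in product([0, 1], repeat=len(activations)):
        # Probability of this particular mask (units are dropped independently).
        prob = 1.0
        for m in mask:
            prob *= keep if m == 1 else drop_rate
        value = sum(w * a * m for w, a, m in zip(weights, activations, mask))
        total += prob * value
    return total

activations = [1.0, 2.0, 3.0]  # made-up neuron outputs
weights = [0.5, -1.0, 2.0]     # made-up weights into one downstream unit
drop_rate = 0.2

train_mean = expected_masked_sum(activations, weights, drop_rate)
test_unscaled = sum(w * a for w, a in zip(weights, activations))
test_scaled = sum(w * a * (1.0 - drop_rate) for w, a in zip(weights, activations))

print(train_mean, test_unscaled, test_scaled)
```

The training-time average agrees with the scaled test-time value, while the unscaled value is larger. The match is exact only for this linear weighted sum; once nonlinear activations are involved, the scaling is an approximation. The loop over 2^n masks also hints at why dropout is described as training an exponential number of architectures.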