Code&Data Insights

[Machine Learning] Cross Validation | Confusion Matrix | ROC - AUC curves


paka_corn 2023. 5. 13. 08:15

[ Cross Validation ]

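: Cross Validation lets us compare different machine learning methods and estimate how well each one will work in practice on data it has not seen. Instead of training on all the data, it repeatedly splits the data into a training set and a testing set, so that every sample is eventually used for testing.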

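- K-Fold : split the data into K equal-sized blocks (folds). Each fold is used once as the testing set while the remaining K - 1 folds are used for training, and the K test results are averaged.

( K could be any number from 2 up to the number of samples! 10-fold is a common choice, and using K = number of samples is called Leave-One-Out Cross Validation. )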

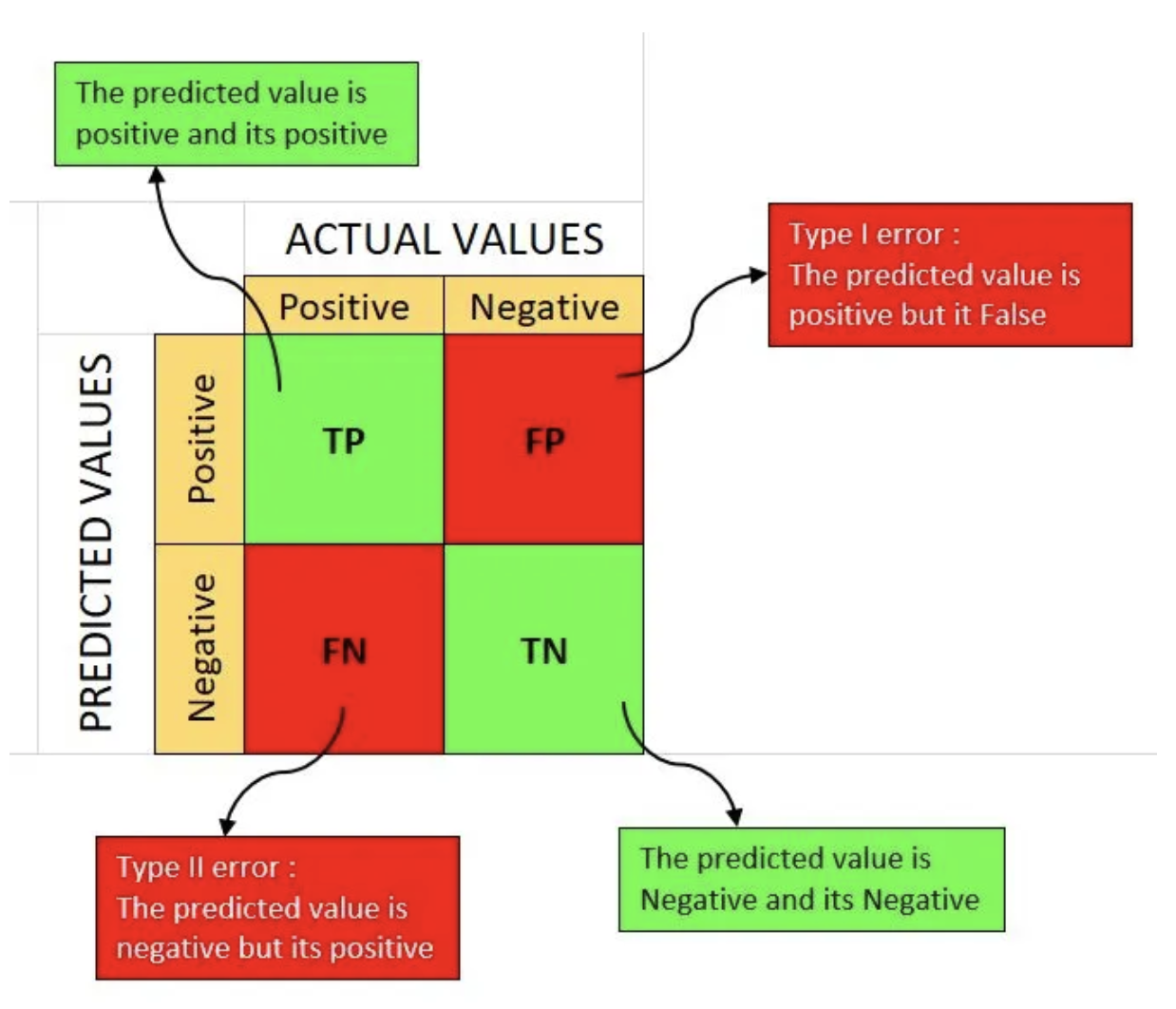

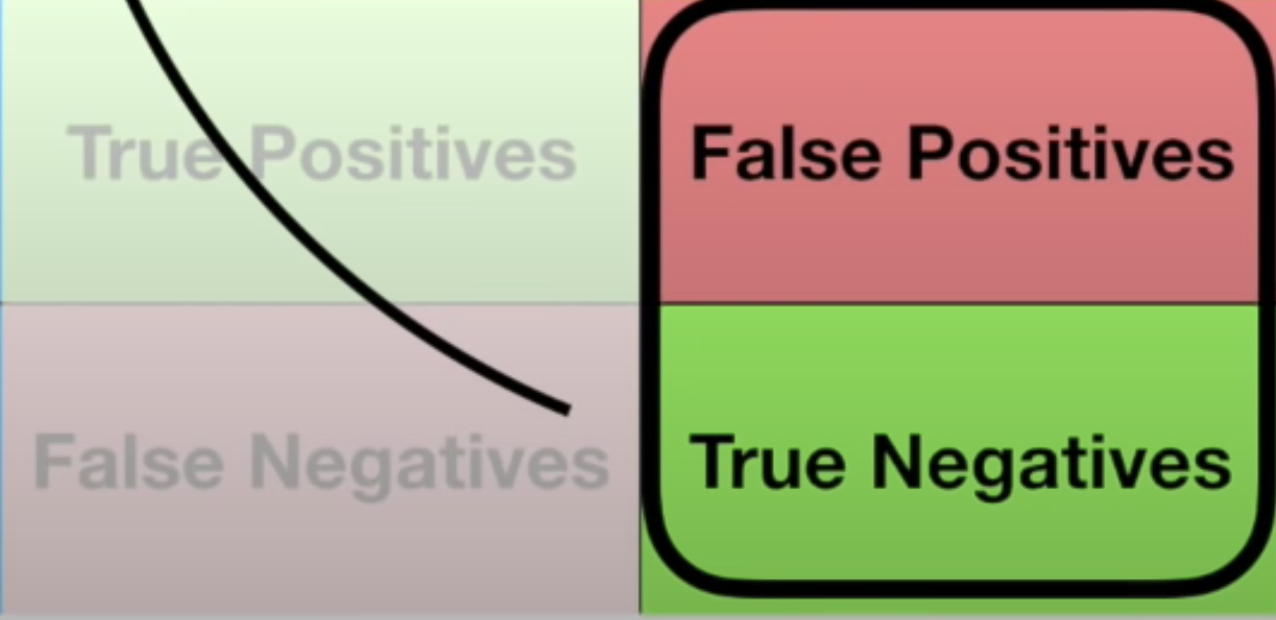
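Here is a minimal plain-Python sketch of K-Fold Cross Validation, just to make the splitting concrete. The function names and the `majority_class` "method" are hypothetical, made up for illustration; in practice a library such as scikit-learn (its `KFold` class) does this for you.

```python
from collections import Counter


def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds.

    Every sample lands in exactly one test fold.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread the remainder so fold sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


def cross_val_accuracy(fit_predict, X, y, k):
    """Average test accuracy of one method over k folds."""
    scores = []
    for train, test in k_fold_indices(len(X), k):
        preds = fit_predict([X[i] for i in train], [y[i] for i in train],
                            [X[i] for i in test])
        correct = sum(p == y[i] for p, i in zip(preds, test))
        scores.append(correct / len(test))
    return sum(scores) / len(scores)


def majority_class(train_X, train_y, test_X):
    # Hypothetical baseline method: always predict the most common training label
    label = Counter(train_y).most_common(1)[0][0]
    return [label] * len(test_X)


X = list(range(10))
y = [0] * 7 + [1] * 3
print(cross_val_accuracy(majority_class, X, y, k=5))  # 0.7
```

To compare methods, you would call `cross_val_accuracy` once per method with the same folds and pick the one with the higher average.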

[ Confusion Matrix ]

Confusion Matrix : To decide which method suits the given data set best, we need to summarize how each method performed on the testing data.

=> one way to do this is by creating a Confusion Matrix for each method

Sensitivity, Specificity, ROC and AUC can help us decide which method is best.

=> Type 1 Error : False Positive (predicted positive, but actually negative)

=> Type 2 Error : False Negative (predicted negative, but actually positive)

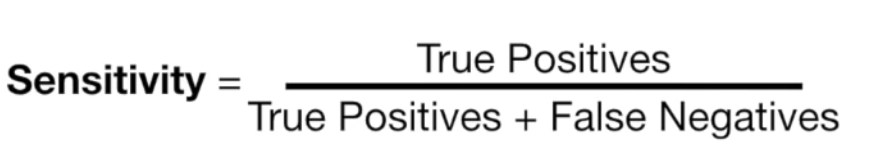

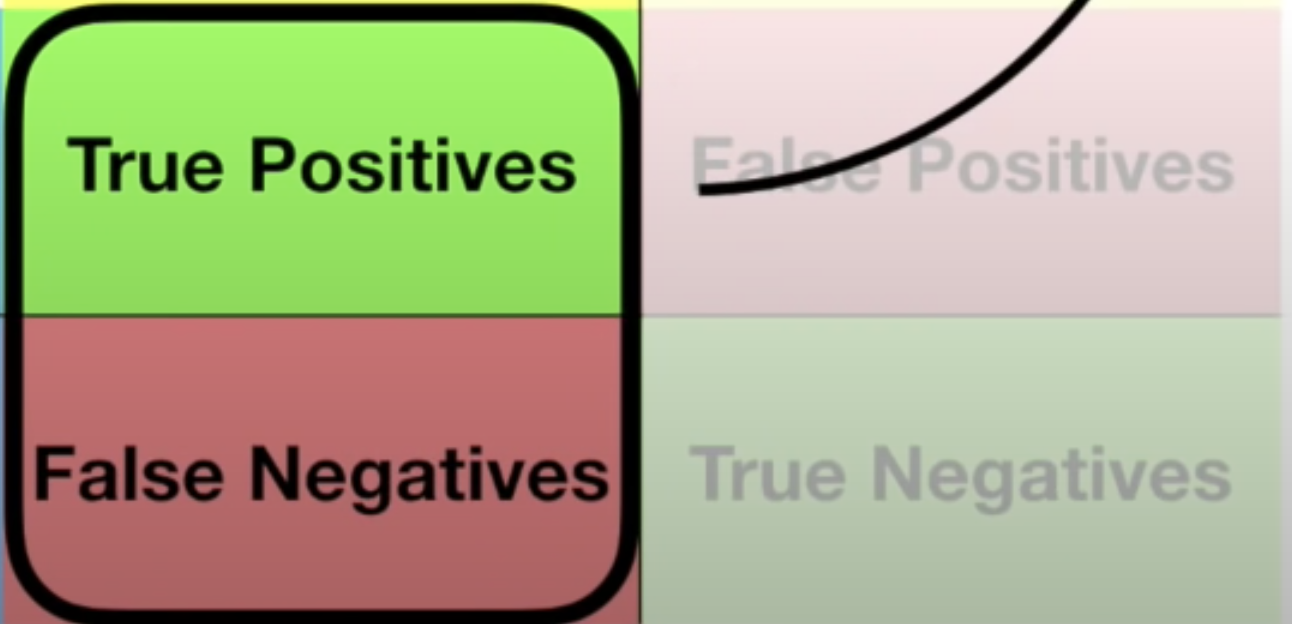
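A small sketch of how the four cells of a binary confusion matrix are counted from actual and predicted labels. It assumes labels are 0/1 with 1 as the positive class; the function name is hypothetical.

```python
def confusion_matrix(actual, predicted, positive=1):
    """Return (TP, FP, FN, TN) counts for a binary classifier."""
    tp = fp = fn = tn = 0
    for a, p in zip(actual, predicted):
        if p == positive and a == positive:
            tp += 1
        elif p == positive:   # predicted positive, actually negative: Type 1 error
            fp += 1
        elif a == positive:   # predicted negative, actually positive: Type 2 error
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


actual    = [1, 1, 1, 0, 0, 0, 0, 1]
predicted = [1, 0, 1, 0, 1, 0, 0, 1]
print(confusion_matrix(actual, predicted))  # (3, 1, 1, 3)
```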

1) Sensitivity

Sensitivity - the percentage of actual positives that were correctly identified: TP / (TP + FN)

=> when comparing methods, the one with higher sensitivity is better at correctly identifying positives.

2) Specificity

Specificity - the percentage of actual negatives that were correctly identified: TN / (TN + FP)

=> when comparing methods, the one with higher specificity is better at correctly identifying negatives.

Therefore, Sensitivity and Specificity let us choose the machine learning method that fits our data best, depending on whether correctly identifying positives or negatives matters more to us.

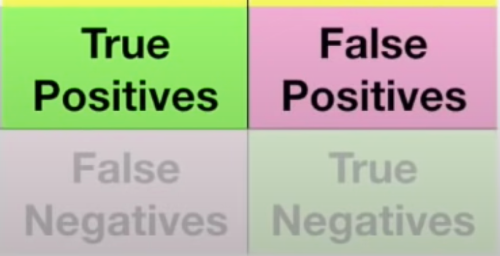

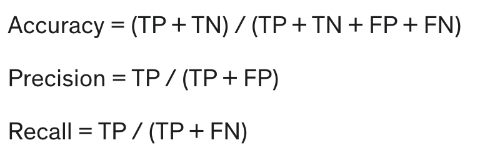
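A sketch of comparing two methods with Sensitivity and Specificity. The confusion-matrix counts below are made up for illustration and do not come from any real experiment.

```python
def sensitivity(tp, fn):
    # Share of actual positives correctly identified: TP / (TP + FN)
    return tp / (tp + fn)


def specificity(tn, fp):
    # Share of actual negatives correctly identified: TN / (TN + FP)
    return tn / (tn + fp)


# Made-up confusion-matrix counts for two hypothetical methods
methods = {
    "method_a": {"tp": 80, "fn": 20, "tn": 70, "fp": 30},
    "method_b": {"tp": 70, "fn": 30, "tn": 85, "fp": 15},
}
for name, c in methods.items():
    print(name, sensitivity(c["tp"], c["fn"]), specificity(c["tn"], c["fp"]))
# method_a 0.8 0.7
# method_b 0.7 0.85
```

Here method_a would be the pick if catching positives matters more, and method_b if correctly ruling out negatives matters more.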

3) Precision

=> measuring exactness

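- the proportion of positive predictions that are actually positive: TP / (TP + FP)

- Can be used instead of the False Positive Rate on an ROC graph (giving a Precision-Recall curve)!!!

- does not include the number of TN, so it is not distorted by a large number of negatives when the data are imbalanced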

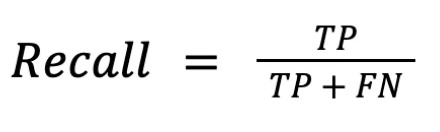
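A quick sketch of why ignoring TN helps with imbalanced data. The counts are made up: TP and FP stay the same while the number of true negatives grows a lot.

```python
def precision(tp, fp):
    # Share of predicted positives that are truly positive: TP / (TP + FP)
    return tp / (tp + fp)


def false_positive_rate(fp, tn):
    # x-axis of an ROC graph: FP / (FP + TN) = 1 - Specificity
    return fp / (fp + tn)


tp, fp = 9, 9  # made-up counts: half of the positive predictions are wrong
for tn in (90, 9000):  # balanced-ish vs. heavily imbalanced negatives
    print(tn, precision(tp, fp), false_positive_rate(fp, tn))
```

Precision stays at 0.5 in both cases, while the False Positive Rate drops from about 0.091 to about 0.001, so an FPR-based view would hide the fact that half of the positive predictions are wrong.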

4) Recall

=> measuring completeness

- Recall, also known as the true positive rate (TPR) or sensitivity, is the percentage of samples from the class of interest (the "positive class") that a machine learning model correctly identifies: TP / (TP + FN)

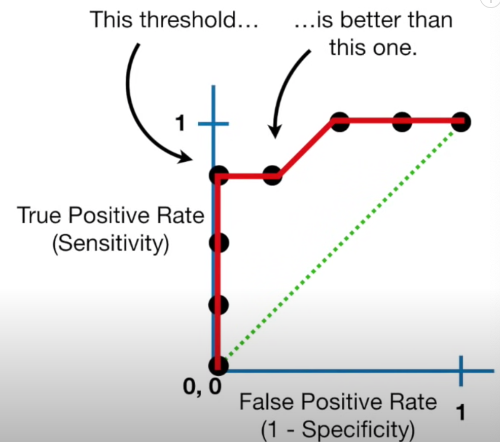

[ ROC curves ]

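ROC curves - plot the True Positive Rate (Sensitivity) on the y-axis against the False Positive Rate (1 - Specificity) on the x-axis for every classification threshold, which makes it easy to identify the best threshold for making a decision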
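A minimal sketch of building ROC points by sweeping the threshold over a model's scores. The scores and labels are made up, and picking the threshold that maximizes TPR - FPR (Youden's J) is just one common rule, assumed here for illustration.

```python
def roc_points(scores, labels):
    """Return (threshold, FPR, TPR) for each distinct score used as threshold.

    A sample is predicted positive when its score >= threshold.
    labels: 1 = positive, 0 = negative.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = []
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((t, fp / n_neg, tp / n_pos))
    return points


scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]  # made-up model scores
labels = [1, 1, 0, 1, 1, 0, 0, 0]
points = roc_points(scores, labels)
# One common rule (Youden's J): pick the threshold maximizing TPR - FPR
best_t, best_fpr, best_tpr = max(points, key=lambda p: p[2] - p[1])
print(best_t, best_fpr, best_tpr)  # 0.4 0.25 1.0
```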

AUC

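AUC - the Area Under the ROC Curve. It ranges from 0 to 1 (0.5 corresponds to random guessing), and the method with the larger AUC is generally better at separating positives from negatives, so AUC helps decide which categorization method to use.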

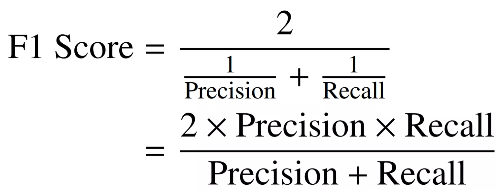
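AUC can also be computed without drawing the curve: it equals the probability that a randomly chosen positive sample gets a higher score than a randomly chosen negative one (ties count as half). This pairwise formulation is swapped in here instead of summing trapezoids under the curve; both give the same number. Scores and labels are made up.

```python
def auc(scores, labels):
    """AUC as P(score of random positive > score of random negative)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # a tie counts as half a win
    return wins / (len(pos) * len(neg))


scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]  # made-up model scores
labels = [1, 1, 0, 1, 1, 0, 0, 0]
print(auc(scores, labels))  # 0.875
```

Running the same function on two methods' scores for the same test data gives one number per method, and the larger one is usually preferred.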

[ F1 Score ]

F1 Score : combines Precision and Recall into a single number; the higher both of them are, the higher the F1-score.

- F1-score ranges between 0 and 1.

- The closer it is to 1, the better the model.

=> the harmonic mean of Precision and Recall, a compromise between the two that stays low if either one is low

F1 Score = 2 * Precision * Recall / (Precision + Recall)
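A tiny sketch of the formula above. Returning 0.0 when both Precision and Recall are 0 is an assumption made here to avoid dividing by zero.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(f1_score(0.75, 0.75))  # 0.75
print(f1_score(0.9, 0.1))    # about 0.18, far below the arithmetic mean of 0.5
```

The second call shows why F1 is a "compromise": a model that is very precise but misses most positives still gets a low F1-score.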