Code&Data Insights


[Machine Learning] Generalization, Capacity, Overfitting, Underfitting

paka_corn 2023. 11. 1. 08:57

Generalization

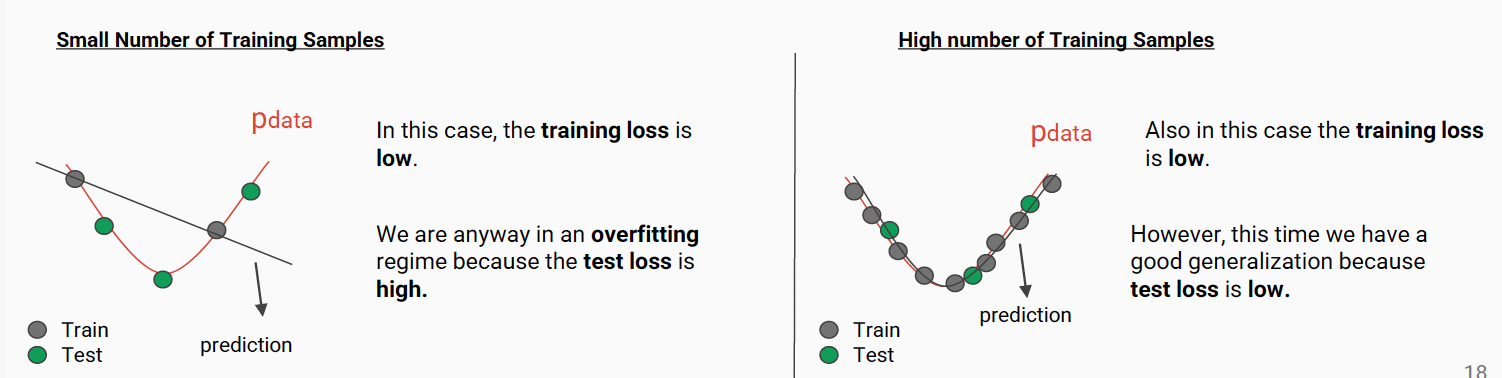

: the ability of a machine learning algorithm (model) to perform well on previously unseen data

Training Loss

: the loss function computed on the training set

Test Loss

: the loss function computed on the test set (data the model did not see during training)
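A minimal sketch of the two losses, assuming mean squared error as the loss function, a tiny hypothetical dataset whose targets follow y = 2x, and a hand-picked model standing in for a trained one. `mse`, the data, and `model` are illustrative, not from the post:

```python
def mse(model, xs, ys):
    """Mean squared error of `model` over paired inputs xs and targets ys."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical toy data: targets follow y = 2x.
train_x, train_y = [0.0, 1.0, 2.0], [0.0, 2.0, 4.0]
test_x, test_y = [3.0, 4.0], [6.0, 8.0]

# A hand-picked, slightly-off model standing in for a trained one.
def model(x):
    return 2.1 * x

train_loss = mse(model, train_x, train_y)  # loss computed with the training set
test_loss = mse(model, test_x, test_y)     # loss computed with the test set
gap = test_loss - train_loss               # generalization gap

print(f"train={train_loss:.4f} test={test_loss:.4f} gap={gap:.4f}")
```

This prints `train=0.0167 test=0.1250 gap=0.1083`: the same model scores worse on data it was not fitted to, and the size of that gap is what the rest of the post is about.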

=> More training data usually leads to better generalization!

Capacity

: Underfitting and Overfitting are connected to the capacity of the model.

capacity(= representational capacity)

: attempts to quantify how “big” (or “rich”) the hypothesis space is.

High Capacity : complex models (e.g., combinations of linear, exponential, sinusoidal, and logarithmic functions)

Low Capacity : linear functions only
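One way to make "hypothesis space" concrete is a sketch in which each hypothesis is a weighted sum of basis functions, assuming a hypothetical linear-only basis for low capacity and that basis plus exp, sin, and log for high capacity. The basis lists and `make_hypothesis` are illustrative names:

```python
import math

# Hypothetical basis functions; a hypothesis is a weighted sum of them.
LOW_CAPACITY_BASIS = [lambda x: 1.0, lambda x: x]  # lines only: w0 + w1*x
HIGH_CAPACITY_BASIS = LOW_CAPACITY_BASIS + [
    math.exp,  # exponential
    math.sin,  # sinusoidal
    math.log,  # logarithmic (only defined for x > 0)
]

def make_hypothesis(basis, weights):
    """Return the function x -> sum_k weights[k] * basis[k](x)."""
    assert len(basis) == len(weights)
    return lambda x: sum(w * f(x) for w, f in zip(weights, basis))

# Every line in the low-capacity space is also in the high-capacity space
# (set the extra weights to zero), so the larger space is at least as rich.
line = make_hypothesis(LOW_CAPACITY_BASIS, [1.0, 2.0])
same_line = make_hypothesis(HIGH_CAPACITY_BASIS, [1.0, 2.0, 0.0, 0.0, 0.0])

# The high-capacity space also contains curves that no straight line matches.
wavy = make_hypothesis(HIGH_CAPACITY_BASIS, [0.0, 0.0, 0.0, 1.0, 0.0])  # sin(x)

for x in (0.5, 1.0, 2.0):
    print(x, line(x), same_line(x), wavy(x))
```

The nesting is the point: the high-capacity space contains every low-capacity hypothesis plus many more, so it can fit more patterns, including noise.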

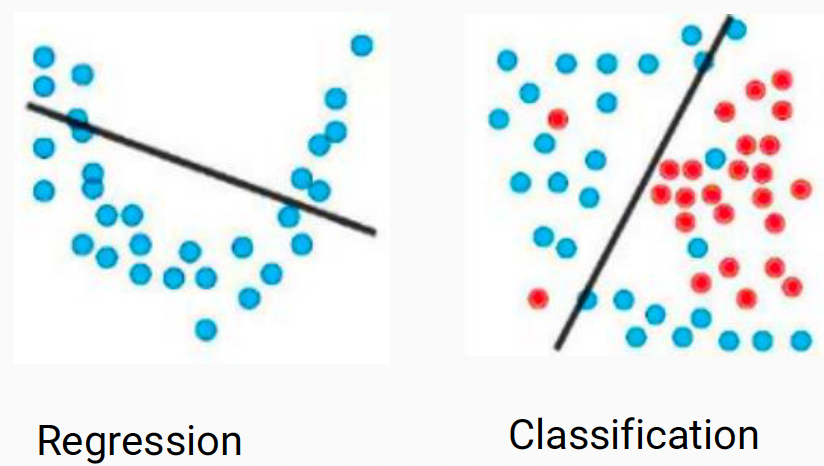

How well does a model generalize to unseen data?

-> Overfitting / Underfitting

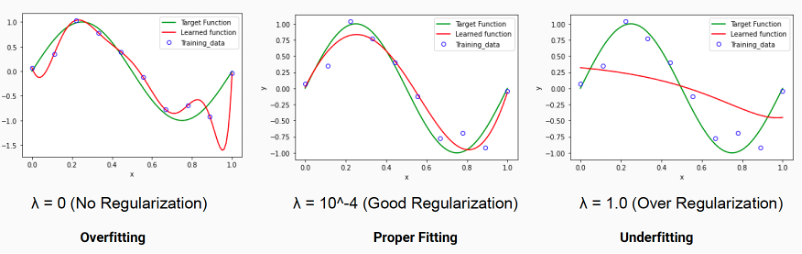
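The two criteria this post uses (training loss stays high for underfitting, large gap between training and test loss for overfitting) can be written as a rough check. A minimal sketch: `diagnose` and both thresholds are hypothetical, and sensible thresholds depend on the loss and the problem's scale:

```python
def diagnose(train_loss, test_loss, max_train_loss=0.5, max_gap=0.5):
    """Rough label following the post's two criteria; thresholds are hypothetical."""
    if train_loss > max_train_loss:       # can't reach a low training loss
        return "underfitting"
    if test_loss - train_loss > max_gap:  # large train/test gap
        return "overfitting"
    return "good fit"

print(diagnose(2.8, 3.1))    # high training loss
print(diagnose(0.0, 150.0))  # perfect on training, poor on test
print(diagnose(0.1, 0.2))    # low loss, small gap
```

The order of the checks matters: a model that cannot fit its training data is labeled underfitting before the gap is even considered.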

Underfitting

: the machine learning algorithm is too simple to explain the training data well

=> The training loss stays high: the model can't achieve a low training loss.

=> Capacity is too low!

=> Low-dimensional data (too few features, so the model is too simple)

=> Too much regularization (over-regularization)
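A minimal sketch of underfitting from too little capacity, assuming hypothetical data generated by y = x² and a model restricted to straight lines. `fit_line` is ordinary least squares, so its training loss is the lowest any line can reach on this data; the names and data are illustrative:

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = w0 + w1 * x (closed form)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    w1 = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    w0 = my - w1 * mx
    return lambda x: w0 + w1 * x

def mse(model, xs, ys):
    """Mean squared error of `model` over paired inputs xs and targets ys."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical data generated by y = x^2: a curve no straight line can follow.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [x ** 2 for x in xs]

line = fit_line(xs, ys)
line_train_loss = mse(line, xs, ys)               # best any line can do
quad_train_loss = mse(lambda x: x ** 2, xs, ys)   # a model with enough capacity

print(f"line: {line_train_loss:.2f}  quadratic: {quad_train_loss:.2f}")
```

The fitted line has slope 0 and intercept 2, giving a training loss of 2.8 that no other line beats, while the quadratic reaches 0: the problem is the capacity, not the fitting procedure.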

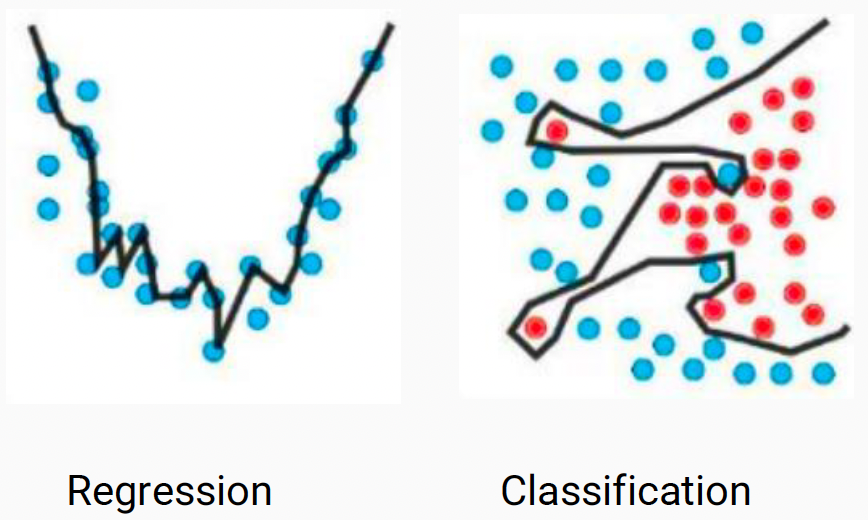

Overfitting

=> The gap between the training and test losses is too large!

(performs well on the training set, but not on the test set)

=> Capacity is too high!

=> High-dimensional data (many features relative to the number of training examples)

=> The training dataset is too small!
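A minimal sketch of overfitting, assuming five hypothetical training points (y = x plus hand-picked noise) and two noise-free test points. The high-capacity model is the degree-4 polynomial that passes through every training point (Lagrange interpolation); the low-capacity model is a least-squares line. Names and data are illustrative:

```python
def lagrange_interpolant(xs, ys):
    """Polynomial of degree len(xs) - 1 passing exactly through every (x, y) pair."""
    def p(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            basis = 1.0
            for j, xj in enumerate(xs):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return p

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = w0 + w1 * x (closed form)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    w1 = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return lambda x: (my - w1 * mx) + w1 * x

def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical data: few noisy training samples of y = x, plus clean test points.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0]
train_y = [0.0, 1.5, 1.5, 3.5, 4.0]
test_x, test_y = [-1.0, 5.0], [-1.0, 5.0]

poly = lagrange_interpolant(train_x, train_y)  # capacity too high for 5 points
line = fit_line(train_x, train_y)              # capacity matched to the trend

train_loss_poly, test_loss_poly = mse(poly, train_x, train_y), mse(poly, test_x, test_y)
train_loss_line, test_loss_line = mse(line, train_x, train_y), mse(line, test_x, test_y)
print(f"polynomial: train={train_loss_poly:.2f} test={test_loss_poly:.2f}")
print(f"line:       train={train_loss_line:.2f} test={test_loss_line:.2f}")
```

The polynomial memorizes the noise (training loss 0, test loss 156.25), while the line has training loss 0.14 and test loss 0.01. With only five points, nothing stops the high-capacity model from bending through every one of them.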

--> How to mitigate overfitting?

- Add more data (but this is often too expensive in real machine learning problems)

- Use regularization methods (e.g., L1/L2 weight penalties) for better generalization!
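One common regularization method is an L2 penalty on the weights (ridge regression). A minimal sketch, assuming a degree-4 polynomial model, hypothetical noisy samples of y = x, and a hand-written solver so no libraries are needed; `solve`, `fit_ridge_poly`, and the `lam` values are illustrative. For simplicity the penalty here also covers the bias weight, which many ridge implementations leave unpenalized:

```python
def solve(a, b):
    """Solve the square system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w

def fit_ridge_poly(xs, ys, degree, lam):
    """Weights minimizing sum((p_w(x) - y)^2) + lam * sum(w_k^2), p_w(x) = sum_k w_k x^k."""
    feats = [[x ** k for k in range(degree + 1)] for x in xs]
    d = degree + 1
    # Ridge normal equations: (X^T X + lam * I) w = X^T y
    a = [[sum(f[i] * f[j] for f in feats) + (lam if i == j else 0.0) for j in range(d)]
         for i in range(d)]
    b = [sum(f[i] * y for f, y in zip(feats, ys)) for i in range(d)]
    return solve(a, b)

def predict(w, x):
    return sum(wk * x ** k for k, wk in enumerate(w))

def mse(w, xs, ys):
    return sum((predict(w, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

train_x = [0.0, 1.0, 2.0, 3.0, 4.0]
train_y = [0.0, 1.5, 1.5, 3.5, 4.0]  # hypothetical noisy samples of y = x

weights = {lam: fit_ridge_poly(train_x, train_y, degree=4, lam=lam) for lam in (0.0, 1.0, 10.0)}
for lam, w in weights.items():
    print(f"lam={lam:5.1f}  ||w||^2={sum(v * v for v in w):8.3f}  "
          f"train MSE={mse(w, train_x, train_y):.4f}")
```

As `lam` grows, the weight norm can only shrink and the training loss can only rise: the penalty trades some training fit for a simpler function. How much the test loss improves depends on the data and on `lam`; in practice `lam` is usually tuned on a held-out validation set.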