Code&Data Insights

[(COMP472)Artificial Intelligence] - Informed Search | Hill Climbing | Greedy Best-First Search | Algorithms A & A* 본문

[(COMP472)Artificial Intelligence] - Informed Search | Hill Climbing | Greedy Best-First Search | Algorithms A & A*

paka_corn 2023. 10. 24. 03:13< Informed Search (Heuristic Search) >

: we try to identify which nodes seems more Optimistic, and explore these first

- With heuristics (useful rules or empirical knowledge) or additional information is used to find the most efficient path.

- A way to visit the most promising nodes first

[ Heuristic ]

- A technique improves the efficiency of search

- Focus on nodes that seem most optimistic according to some function

- Need an estimate function -> heuristic function h(n)

-> No guarantee to be correct

-> Approximation of the lowest cost from node n to the goal

=> H(n) = estimate of the lowest cost from n to goal

=> Shorter search path, less backtracking

=> H(n) in goal state -> h(n) = 0

[ Types of Heuristic h(n) ]

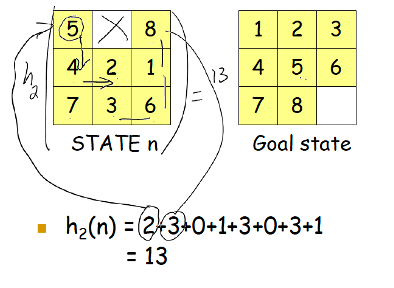

1) Hamming distance (NOT consider the empty tile) = misplaced numbered tiles

2) Manhattan distance

: sum up all the distances by which tiles are out of place

3) Sum of Permutation inversions

-> Actual cost(= h*(n)는 우리 모름, 뭐가 젤 좋은지는 생각하기 나름

-> H(3)가 actual cost에 가장 가까울 수 있음 (less backtracking)

-> Solution path many NOT be necessarily of lower cost

[ Hill Climbing ]

: Hill climbing : only use h(n)

h(n) : n to goal state = estimate of lowest cost of n to goal

- Possibility to stuck in local minima

- Open list X -> Can’t backtrack

- NOT use open list

- Good approximation – not guarantee to find the best solution but good solution

(1) Vanila Hill Climbing

- Take 1st successor s with better h() than current state n

- Good if the branching factor is HIGH!

(2) Steepest ascent hill climbing

- Generate all successor states S

- Run h(n) on all s belongs to S

=> Among all successors s, pick the best successor which has h(n)

[ Greedy Best-First Search ]

- USE open list, sorting in ascending order of value of h(n) 낮은거부터!

- Good approximation – not guarantee to find the best solution but good solution

- Use h(n), NOT g(n)

- Good approximation, but not guarantee the best

-> The quality of the approximation depends on the accuracy of the heuristic

- only use h(n) ( better version of Greedy BFS, not stuck in local minimum)

-> If meet the same node in Open or Closed List, ignore!

Propblems

- Can find the best path from local to local state(goal state)

- Can’t find the best path from the initial to goal state

- focus is on finding a good solution quickly rather than exploring the entire state space for the global optimum.

[ Algorithms A ]

: use f(n) to overcome the disadvantage of Greedy BFS( h(n) uses only, not the best cost since h(n) is an estimate of the lowest cost!

f(n) : root to the goal state ( f(n) = g(n) + h(n) )

F(n) = h(n) + g(n), total cost along path through n

G(n) = actual cost of path from start to node n

H(n) = estimate of cost to reach goal. From to

-> A not guarantee lowest cost

[ Algorithms A* ]

: better version of A algorithm, admissible, recalculate from the closed list ONLY!

=> A* guarantee lowest cost of solution path

=> Admissible! ( all node is h(n) < h*(n)

So is A* always “better” in real life?

- not necessarily

- computing g(n) can take time to compute

- Re-visiting CLOSED is costly

- if client is not looking for the optimal (lowest cost) solution

- a good-enough solution faster (i.e. GBF search) might be

preferable