Code&Data Insights

[Deep NLP] Attention | Transformer 본문

[Deep NLP] Attention | Transformer

paka_corn 2023. 12. 26. 04:27Attention

- Contextual embedding

=> Transform each input embedding into a contextual imbedding

=> Model learns attention weights

- Self-attention

: allows to enhance the embedding of an input word by including information about its context

- Encoder-Decoder Attention

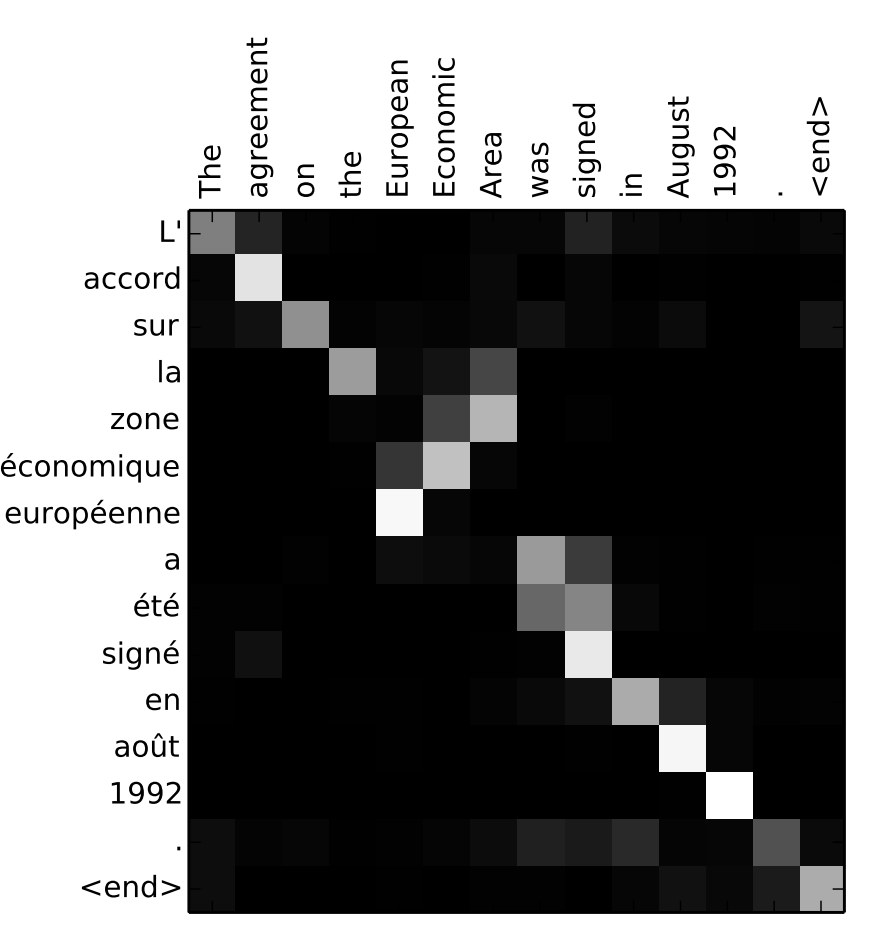

: Attention between words in the input sequence and words in the output sequence

=> how words from two sequences influence each other

Transformer

· Drawback of sequence models

(RNN, LSTM, GRU)

- Information Bottleneck

=> Context is a fixed vector

=> Short 5-word sentences a long 300 pages documents get encoded into the same fixed-size context vector

=> Not sufficient to capture all the information of long document

- Cannot be paralyzed

=> Words in the sequence are fed one after the other

=> Long to train, limits the size of the training data

· Transformer – ‘Attention is all you need’

: the architecture based only on the attention mechanism

- Improves the RNN/LSTM/GRU architectures by

=> Addressing the information bottleneck

=> Allowing feeding words to the model in a parallel fashion(not sequentially)

- Significant performance increase for long sequences

(better quality translation, summarisation)

- Faster training => training from larger datasets

'Artificial Intelligence > Natural Language Processing' 카테고리의 다른 글

| [Generative AI] Generative AI | Capabilities of Generative AI (0) | 2024.03.23 |

|---|---|

| [NLP] Large Language Model (LLM) (0) | 2024.03.23 |

| [Deep NLP] Word Embeddings | Word2Vec (1) | 2023.12.26 |

| [Statistical NLP] N-gram models (1) | 2023.12.26 |

| [Statistical NLP] Bag of Word Model (1) | 2023.12.26 |