Code&Data Insights

[Machine Learning] Dimensionality Reduction - Feature Extraction | PCA | LDA

paka_corn 2023. 6. 26. 09:28

[ Dimensionality Reduction ]

Feature Selection

- a process in machine learning and data analysis where a subset of relevant features (variables or attributes) is selected from a larger set of available features.

- aims to improve the model's performance by reducing overfitting, improving interpretability, and enhancing computational efficiency.
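As a rough illustration of selecting a subset of features, here is a minimal sketch of one simple filter: dropping features whose variance does not exceed a threshold. The function name `select_by_variance`, the threshold, and the toy data are assumptions made up for this example, and a variance filter is only one of many possible selection criteria.

```python
from statistics import pvariance

def select_by_variance(rows, names, threshold=0.0):
    """Keep only the columns whose population variance exceeds `threshold`."""
    columns = list(zip(*rows))  # transpose: one tuple per feature
    keep = [i for i, col in enumerate(columns) if pvariance(col) > threshold]
    selected_names = [names[i] for i in keep]
    selected_rows = [[row[i] for i in keep] for row in rows]
    return selected_names, selected_rows

# Hypothetical toy data: "constant" never changes, so it carries no information.
names = ["height", "constant", "weight"]
rows = [
    [1.70, 1.0, 65.0],
    [1.80, 1.0, 80.0],
    [1.60, 1.0, 55.0],
]

selected_names, selected_rows = select_by_variance(rows, names)
print(selected_names)
```

Note that the surviving columns are original features, unchanged. That is the key difference from feature extraction, which builds new features out of the old ones.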

Feature Extraction

- a process in machine learning and data analysis where new features (representations or descriptors) are derived or extracted from the existing set of features.

- aims to transform the original features into a new set of features that capture essential information and patterns.

=> Techniques : PCA | LDA | Kernel PCA

(ex) Recommender system

[ Principal Component Analysis (PCA) ]

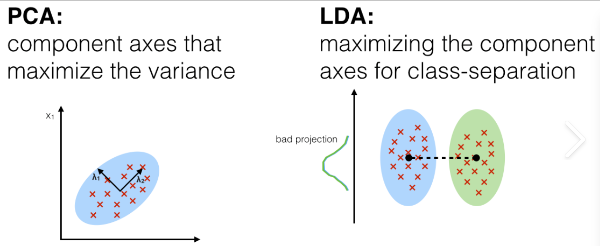

PCA : reduces the dimensions of a d-dimensional dataset by projecting it onto a k-dimensional subspace (where k < d)

=> Unsupervised

- Identify patterns in data

- Detect the correlation between variables (features)
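The projection described above can be sketched with NumPy via an eigendecomposition of the covariance matrix. This is a minimal sketch, not a production implementation: the function name `pca`, the synthetic data, and the choice of `k=2` are assumptions for illustration.

```python
import numpy as np

def pca(X, k):
    """Project the rows of X (n_samples x d) onto the top-k principal components."""
    X_centered = X - X.mean(axis=0)          # center each feature at zero
    cov = np.cov(X_centered, rowvar=False)   # d x d covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigh: for symmetric matrices, ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:k]    # indices of the k largest eigenvalues
    W = eigvecs[:, order]                    # d x k projection matrix
    explained = eigvals[order] / eigvals.sum()  # share of total variance kept per component
    return X_centered @ W, W, explained

# Synthetic data (assumption): the third feature is almost a + b,
# so nearly all the variance lives in a 2-dimensional subspace.
rng = np.random.default_rng(0)
a = rng.normal(size=200)
b = rng.normal(size=200)
X = np.column_stack([a, b, a + b + 0.01 * rng.normal(size=200)])

Z, W, explained = pca(X, k=2)
print(Z.shape)          # (200, 2)
print(explained.sum())  # close to 1 because the third feature is nearly redundant
```

No class labels appear anywhere in the function, which is what makes PCA unsupervised. The projected columns of `Z` are uncorrelated with each other, since the eigenvectors diagonalize the covariance matrix.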

Covariance

Covariance is a computational stepping stone to quantities that are easier to interpret, such as correlation.

=> The sign of the covariance classifies the relationship between two variables into three types.

1) Positive trend : covariance > 0

2) Negative trend : covariance < 0

3) No linear trend : covariance = 0 (in practice, close to 0)

* Covariance is sensitive to the scale of the data -> its magnitude is hard to interpret: a larger covariance value does not by itself tell you whether the slope is steeper or the relationship is stronger => Correlation is a normalized measure of the relationship that is not sensitive to the scale of the data.
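To make the scale issue concrete, here is a small sketch in plain Python. The helper names and the toy numbers are assumptions for illustration. The same `x` is expressed in two units (multiplied by 100, as if converting metres to centimetres) and compared against the same `y`.

```python
from math import sqrt

def covariance(x, y):
    """Sample covariance (divides by n - 1)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)

def correlation(x, y):
    """Pearson correlation: covariance divided by both standard deviations."""
    return covariance(x, y) / sqrt(covariance(x, x) * covariance(y, y))

x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
x_scaled = [100 * v for v in x]  # same measurements in a different unit

print(covariance(x, y), covariance(x_scaled, y))    # covariance grows 100x
print(correlation(x, y), correlation(x_scaled, y))  # correlation is unchanged
```

Both covariances are positive, so the sign still reports a positive trend, but only the correlation gives a value that can be compared across datasets with different units.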

[ Linear Discriminant Analysis (LDA) ]

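LDA : projects a feature space (a dataset of n-dimensional samples) onto a smaller subspace of dimension k (where k <= n-1) while maintaining the class-discriminatory information. With c classes, the between-class scatter matrix has rank at most c-1, so k is also limited to at most c-1.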

=> Supervised

- Used as a dimensionality reduction technique

- Used in the pre-processing step for pattern classification
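A minimal NumPy sketch of the idea follows, using the classic scatter-matrix formulation: find directions that maximize between-class scatter relative to within-class scatter. The function name `lda`, the use of a pseudo-inverse, and the synthetic two-class data are assumptions for illustration, not a reference implementation.

```python
import numpy as np

def lda(X, y, k):
    """Project X (n_samples x d) onto the top-k linear discriminants."""
    d = X.shape[1]
    overall_mean = X.mean(axis=0)
    S_w = np.zeros((d, d))  # within-class scatter
    S_b = np.zeros((d, d))  # between-class scatter
    for label in np.unique(y):
        X_c = X[y == label]
        mean_c = X_c.mean(axis=0)
        centered = X_c - mean_c
        S_w += centered.T @ centered
        diff = (mean_c - overall_mean).reshape(-1, 1)
        S_b += len(X_c) * (diff @ diff.T)
    # Eigenvectors of pinv(S_w) @ S_b give the discriminant directions.
    eigvals, eigvecs = np.linalg.eig(np.linalg.pinv(S_w) @ S_b)
    order = np.argsort(eigvals.real)[::-1][:k]  # largest eigenvalues first
    W = eigvecs[:, order].real                  # d x k projection matrix
    return X @ W, W

# Synthetic data (assumption): two classes in 3 dimensions, shifted along the first two axes.
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(loc=[0.0, 0.0, 0.0], size=(50, 3)),
    rng.normal(loc=[4.0, 4.0, 0.0], size=(50, 3)),
])
y = np.array([0] * 50 + [1] * 50)

Z, W = lda(X, y, k=1)
gap = abs(Z[y == 0].mean() - Z[y == 1].mean())
print(Z.shape, gap)  # one discriminant; the class means stay well separated
```

Unlike the PCA projection, this function needs the labels `y`, which is why LDA is supervised. With two classes there is only one informative discriminant, so `k=1` is the natural choice here.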